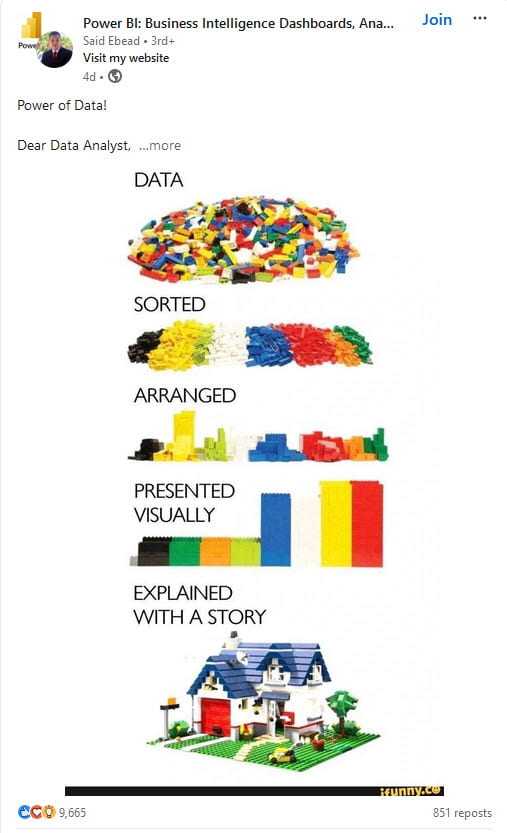

I have been posting the past few weeks about my exploration of AI…in a way, of trying to make meaning from all the data I have been reading. So I noticed this image from Said Ebead on LinkedIn this weekend…and it brought to mind the difference between data and meaning.

Unrelated…yet maybe not…was an article by Lakshmi Varanasi regarding a fired OpenAI researcher who published a long essay on the future of AI. She used ChatGPT to summarize this essay:

“Leopold Aschenbrenner’s essay discusses the transformative potential of artificial general intelligence (AGI) and superintelligence, and forecasts significant advancements in AI technology in the near future. Aschenbrenner, formerly associated with OpenAI, outlines how the progression from current AI models like GPT-4 to AGI could occur much faster than anticipated, based on trends in computational power and algorithmic efficiency.”

She (and her ChatGPT) noted four takeaways:

Rapid Progress in AI

“Aschenbrenner argues that AI development is accelerating at an unprecedented rate. He predicts that by 2027, AI models could reach the capabilities of human AI researchers and engineers, potentially leading to an intelligence explosion where AI surpasses human intelligence.”

It is interesting that back in 2005, Ray Kurzweil published his book The Singularity is Near…predicting that this would occur by 2045. He apparently is coming out with a new book this month – The Singularity is Nearer!

Economic and Security Implications

“The essay highlights the immense economic and security implications of these advancements. Aschenbrenner points out that trillions of dollars are being invested into developing the infrastructure needed to support these AI systems, such as GPUs, data centers, and power generation. He also emphasizes the critical need for securing these technologies to prevent misuse, particularly by state actors like the CCP {Chinese Communist Party}.”

Technical and Ethical Challenges

“The essay discusses the significant challenges in controlling AI systems smarter than humans, referring to this as the ‘superalignment’ problem. Managing this will be crucial to prevent catastrophic outcomes.”

Predictions and Societal Impact

“Aschenbrenner suggests that few people truly understand the scale of change that AI is about to bring. He discusses the potential for AI to reshape industries, enhance national security, and pose new ethical and governance challenges.”

She closed by noting that Aschenbrenner stated that there were probably only a few hundred people, most of them in AI labs in San Francisco, who had “situational awareness” about the industry. An interesting term…and I would not be sure that I even begin to have situational awareness!

All very interesting and related to my past weeks’ explorations.

But what struck me was her use of GenAI to make sense of a long essay…in essence to move from data to a story…much like the image suggests in the difference between a pile of Lego blocks and a recognizable home. To me, this is an effective use of AI that would benefit academics…and their students.

Fifteen years ago at an academic conference, Mike Wesch shared a process he used to get graduate students to read hundreds of articles. It was pretty simple…he divided up the articles among his students and had them post summaries. Students were then responsible for the collective summaries rather than the entire articles.

How times have changed! Now, GenAI can handle the task of summarizing…in effect taking a lot of data and making stories of that data. Think about how this might change research methodologies and learning strategies.

Pretty exciting…but it also raises ethical considerations, such as impacts on critical thinking and depth of understanding. As I noted in my last post, without critical thought given to prompts, GenAI could simply dumb down academic reading.

One of my favorite books thirty years ago was Paradigms by Joel Barker. He discussed how the rules for success in businesses or professions (paradigms) can change at any time. The subtitle to the book was “The Business of Discovering the Future.” In a lively read, Barker explained how to spot paradigm shifts, how they unfold, and how to profit from them.

Over the past thirty years, few of his predictions came true. Still, I think that he was spot on in his suggestion that we explore paradigm shifts to make sense of what is to come.

We stand on the brink of what could be another paradigm shift in artificial intelligence, and the implications for education and information processing are profound. The ability of AI to distill vast amounts of data into meaningful narratives – much like the opening image – challenges our traditional approaches to learning and research. While tools like ChatGPT or Claude offer exciting possibilities for efficiency and accessibility, they also raise important questions about the nature of understanding and the role of critical thinking in education. As educators and technologists, we must navigate this new landscape thoughtfully, leveraging AI’s potential while ensuring we cultivate the uniquely human skills of analysis, creativity, and ethical reasoning. As my friend Harold Jarche posted last year:

“Gianfranco Chicco described a need to move from artificial to artisanal intelligence.

In craftsmanship, different kinds of knowledge are already at play. Intellectual knowledge residing in our brain and tacit knowledge residing in our body. What AI adds is the possibility for a different, non-human kind of intelligence to have a role … What often gets lost in the current narrative about AI is the why we do what we do. The act of creation, of bringing something into this world that didn’t exist before is not just a transactional act to favour convenience or reduce friction. We create because it’s a way to manifest our humanity, to understand the world and our role in it, and to connect with fellow humans. Removing that creation from our beings, outsourcing it onto another entity — in this case AI — because it can make it easier or even better is to miss the point completely.”

The future of education may well lie in finding the right balance between AI-assisted information processing and deep, human-driven comprehension.

{Graphics: Ebead, HarperBusiness, DALL-E}