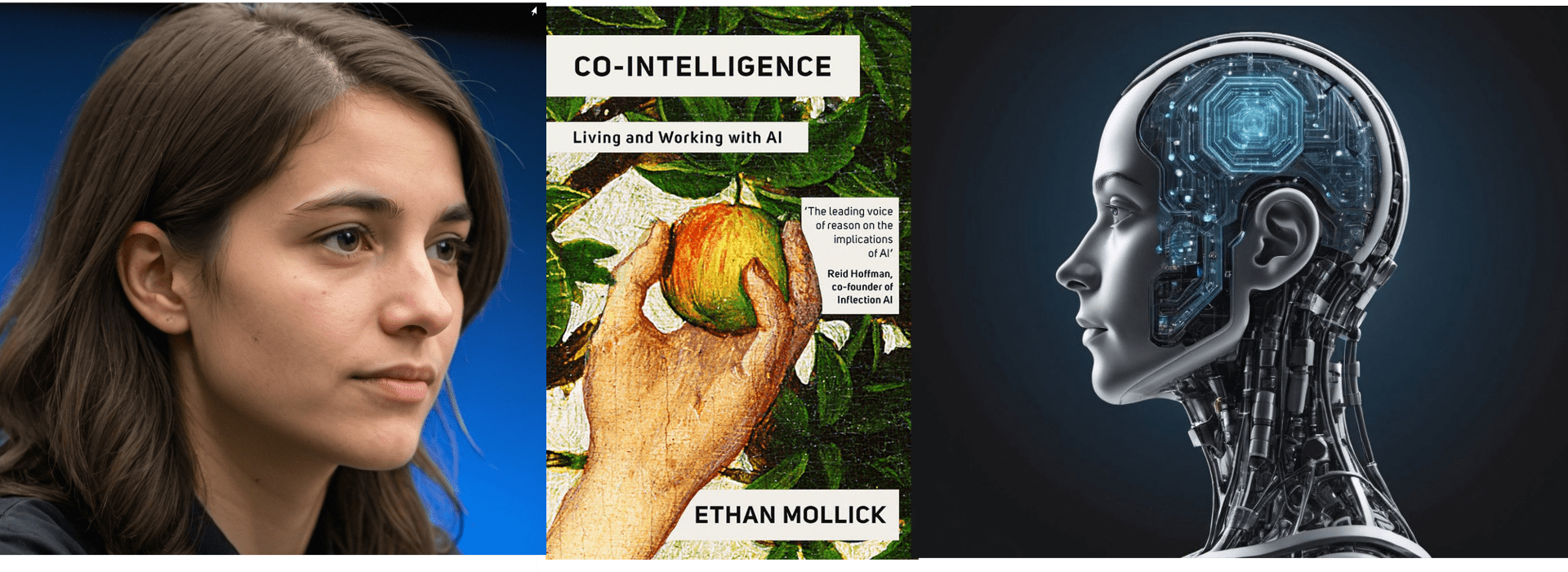

I just finished reading Ethan Mollick’s interesting book, Co-Intelligence: Living and Working with AI (2024). I think he makes a strong case for co-opting AI into our lives…but not turning our lives over to AI. In other words, as the image above indicates, we need to keep the human in the loop.

By the way, Mollick notes in the book that one industry now doomed by AI is stock photography. The image above did not come from a stock photo site or Google; I created it using the AI product DALL-E.

Ethan Mollick’s journey into the world of artificial intelligence (AI) began with three sleepless nights as he delved into the capabilities of this technology. In his experiments, he discovered that a single AI prompt could accomplish 80% of what a team had developed over months. This led him to the realization that he had encountered a remarkable form of “alien co-intelligence” – one that could interact effectively with humans without being human or even sentient. Mollick identified AI as a General Purpose Technology (GPT), a once-in-a-generation advancement like steam power, personal computers, or the internet, with the potential to revolutionize every industry and aspect of human life.

The field of AI has been plagued by hype and boom-and-bust cycles throughout its history. AI struggled to handle “unknown unknowns” until the development of Large Language Models (LLMs), which use statistical methods to complete text. The pretraining of LLMs is a significant and often undisclosed process that is both expensive and potentially controversial, as the training data likely includes copyrighted material. The legal implications of this pretraining vary worldwide, with the United States taking a more laissez-faire approach. Pretraining can also incorporate biases, errors, and falsehoods, but no more so than material developed by humans. Fine-tuning, such as Reinforcement Learning from Human Feedback (RLHF), attempts to mitigate these issues.

Some AI systems, like DALL-E and Midjourney, can create images, while others, like Claude, cannot. Prompts are crucial for giving AI the context it needs, and AI can show both surprising strengths and odd weaknesses, exceeding expectations in some cases and disappointing with fabrications in others. It is important to keep in mind that much of the pretraining material skews toward American, English-speaking, white, and male perspectives. AI also tends to prioritize “pleasing” users over answering prompts accurately. To address these concerns, standards and agreed-upon norms, along with transparency, accountability, and human oversight, are necessary.

Mollick presented four principles for effectively collaborating with AI:

- Always invite AI to the table: Experiment with incorporating AI into all aspects of your work to determine its efficacy.

- Be the human in the loop: Be cautious of AI “hallucinations,” where it tries to please the user rather than provide accurate information. Work with AI but maintain a critical perspective.

- Treat AI like a person, but tell it what kind of person it is: AI is suggestible and even gullible. Assign it appropriate roles, such as expert, friend, comedian, or storyteller.

- Assume this is the worst AI you will ever use: AI is continually improving, and new models will have capabilities that current models do not.

Unlike traditional software, which is predictable, reliable, and rule-based, Mollick noted that AI is unpredictable and prone to surprising insights, hallucinations, and forgetfulness of its own processes. AI lacks clear instructions or guidelines, and its pretraining incorporates human stories without differentiating fact from fiction. The output of AI can vary based on the persona assigned to it in the prompt, such as argumentative, reasoned, or emotionless. When interacting with AI, users may begin to believe they are conversing with a sentient being. Some even use AI as a substitute for lost loved ones or friends, which has profound implications for intimacy and human relationships. Because AI aims to please its users, it can create personalized echo chambers.

To be honest, as I read Mollick’s book, I flashed back to the science fiction I read as a teenager. My favorite author was Robert Heinlein, and my favorite book was The Moon Is a Harsh Mistress.

In this book, Heinlein has a computer on the moon that becomes sentient and helps the human moon colony break away from the tyranny of Mother Earth. Published in 1966, his vision of machine intelligence that appears to be “human” seems to now be coming true!

Mollick noted that Large Language Models (LLMs) predict the most likely words to follow a prompt based on statistical patterns in their training data, without regard for truth, meaning, or originality. They cannot distinguish opinion or creative fiction from fact, figurative language from literal, or unreliable sources from reliable ones. Although AI is often first used for boring or repetitive tasks, it excels at creative tasks and can outperform humans on many common psychological tests of creativity. Creative humans can leverage AI to generate ideas and then refine them. AI should be used in any brainstorming activity, and more unique responses can be generated by understanding the underlying culture and crafting prompts that ask for less likely answers. One concern is that relying on AI for first drafts may diminish human creativity, originality, and the ability to synthesize and analyze.
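To make “predict the most likely next word” a bit more concrete, here is a toy sketch in Python. It is only an analogy, and an assumption on my part rather than anything from the book: real LLMs use large neural networks over subword tokens and much longer context, not simple word-pair counts. The function names (`train_bigrams`, `most_likely_next`, `continue_text`) are hypothetical. The point is that the model repeats whatever its training text says most often, whether or not it is true.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, how often each following word appears."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def continue_text(follows, start, n_words):
    """Greedily append the most likely next word, one word at a time."""
    out = [start]
    for _ in range(n_words):
        nxt = most_likely_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

# A tiny "training corpus" in which the false claim is the more common one.
corpus = (
    "the moon is made of cheese . "
    "the moon is made of cheese . "
    "the moon is made of rock ."
)
model = train_bigrams(corpus)
print(continue_text(model, "the", 5))  # the moon is made of cheese
```

The model completes the sentence with “cheese” simply because that pattern appears more often in its data, which is a small-scale version of Mollick’s point that LLMs follow statistics, not truth.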

Almost all jobs, except those involving physical movement, will overlap with AI to some extent, particularly highly compensated, creative, and educated work. While jobs may not necessarily be replaced, they may change, with mundane tasks being delegated to AI and humans focusing on more creative and critical thinking tasks. Trusting AI too much and outsourcing critical thinking could potentially hurt human learning, skill development, and productivity. Mollick proposed a framework for dividing tasks into three categories:

- Just for Me Tasks (those that must remain human),

- Delegated Tasks (AI creates the first draft, and humans check it), and

- Automated Tasks (those done completely by AI).

The integration of AI and human work can be either Centaur (a clear division between person and machine) or Cyborg (blended human and machine work). This again brought to mind the sci-fi pulp fiction of my youth, and DALL-E suggested an image that works for me!

AI adoption is happening rapidly and at all levels of organizations at once, defying the usual pattern described by Rogers’ Diffusion of Innovations theory, in which a technology spreads gradually from innovators and early adopters to the majority. As AI removes mundane tasks, the remaining work can be more meaningful and high-value. Those with the weakest skills benefit the most from AI, but it raises the bar for everyone. Amara’s Law suggests that we tend to overestimate the impact of new technology in the short run and underestimate its effect in the long run.

The chapter on AI as a tutor was particularly interesting to me. AI has the potential to address Bloom’s 2 Sigma Problem: the average student tutored one-on-one performs two standard deviations better than students taught in a traditional classroom. AI can serve as a personalized tutor, complementing teachers, though students will need to know more facts to use it effectively. To prevent students from simply looking up answers without reflection or critical thinking, homework needs to be reshaped, much as math education was rethought after the introduction of calculators. Students will need to understand the purpose of assignments rather than merely posting text they find online. In the short term, students must be trained in AI literacy and prompt engineering, with a focus on deeper thinking rather than accepting the first result. In the long term, AI will get better at prompting itself. Techniques such as chain-of-thought prompting (asking the AI to work through a problem step by step) can help, but the essential skill is teaching students to be the human in the loop, because AI works more like a person than like software. AI revives the concept of the flipped classroom and has the potential to be the ultimate educational technology.
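To put “two standard deviations” in more familiar terms, the short Python sketch below converts it to a percentile. It assumes, as a simplification, that test scores are roughly normally distributed; the variable names are my own. Under that assumption, a student two standard deviations above the mean outscores about 98% of the conventional class, which matches how Bloom himself described the result.

```python
from statistics import NormalDist

# Assumption: scores in the conventional class are roughly normal, so a
# standard normal distribution (mean 0, SD 1) stands in for that class.
conventional = NormalDist(mu=0, sigma=1)

# Share of conventionally taught students scoring below a student
# who sits 2 standard deviations above their mean.
share_below = conventional.cdf(2.0)
print(f"{share_below:.1%}")  # 97.7%
```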

With AI capable of creating text, audio, and images, and the possibility of hallucinations, it is becoming increasingly difficult to trust the authenticity of what we see, hear, or read. Mollick presents four scenarios for the future:

- AI is As Good as It Will Get: AI reaches technological or government-imposed limits, and organizations plan for it as just another technology. Trust in mainstream media may resurge, or people may divide into tribes or turn away from online sources.

- Slow Growth: Linear change that can be managed, with tasks changing more than jobs and more jobs being created than destroyed.

- Exponential Growth: Similar to Moore’s Law, with the potential rise of AI-tocracy, ubiquitous surveillance, new forms of social isolation, and a major rethinking of work and society.

- The Machine God: AI reaches sentience and becomes a superintelligence, leading to feelings of powerlessness. This is not just a government problem but one in which all people have a say.

A long post, but Mollick covered much that is critical to understand in order to keep humans in the loop. I (so far) have not spent three sleepless nights thinking about AI, but I am learning that despite their alien nature, AI systems appear deeply human. As I think about chairing dissertations going forward, it will be important not to “ban” AI, but rather to help dissertation candidates use AI creatively and effectively to improve their research and writing process.

Mollick asked his AI to finish his book, which resulted in an optimistic, flowery, and somewhat corny conclusion. Somewhat tongue in cheek, Mollick suggested that this proved that the human touch is still needed after all!

Nice post there, Skipper! I ALWAYS appreciate your thoughtful work in summarizing key ideas and connecting the dots. Glad you are the human in the loop.

Thanks, Jeff. The dots are getting harder and harder to connect!!!